Glad to say that my thesis has passed and been accepted by King's College London, and I am now a Doctor of Philosophy in Multi-agent Systems! For anyone interested in “Agent-based self-organisation for task allocation: reinforcement learning for emergent multi-agent systems”, here is the final work. It brings together research and new algorithms for tackling task and resource allocation in multi-agent systems using reinforcement learning, as well as the emergence of roles within an agent-based system through self-organisation driven by inter-agent rewards and knowledge exchange. If you have any interesting work going on in this or a related area, feel free to drop me a message.

Research highlights

Over the course of our work we demonstrate new algorithms designed for complex, realistic multi-agent systems. We show how our algorithms optimise task and resource allocation in disrupted environments, tackling the various challenges through:

- dynamic system exploration by policy adaptation, using an agent’s historical data to predict how well it is performing in the context of the whole system;
- enhanced recovery of performance after system perturbations by retaining information learnt about the environment;
- optimisation of resource allocation through learning and balancing the goals of other agents;
- the emergence of roles and self-organisation resulting from implicit coordination between agents to complete tasks.

Abstract

There are many systems where tasks must be allocated amongst multiple, distributed agents, and where each participant must manage its limited resources to best complete these tasks. In stable environments with low numbers of agents there are algorithms to search for the best task and resource allocations. In these types of systems strategies can be planned, and agents coordinated, in a centralised manner.

In more complex situations, such as where there are large numbers of agents, or where the environment is highly dynamic or uncertain, these types of solutions do not perform as well. Many real-world systems, however, are both complex and subject to environmental perturbations, e.g. wireless sensor networks, the coordination of vehicles in smart cities, and the orchestration of drone swarms. In this thesis, we provide contributions towards the challenges of task and resource allocation in dynamic multi-agent systems. We develop scalable, decentralised algorithms that work with an agent’s local knowledge to improve task and resource allocations, optimising the utility of a system in precisely these kinds of realistic scenarios.

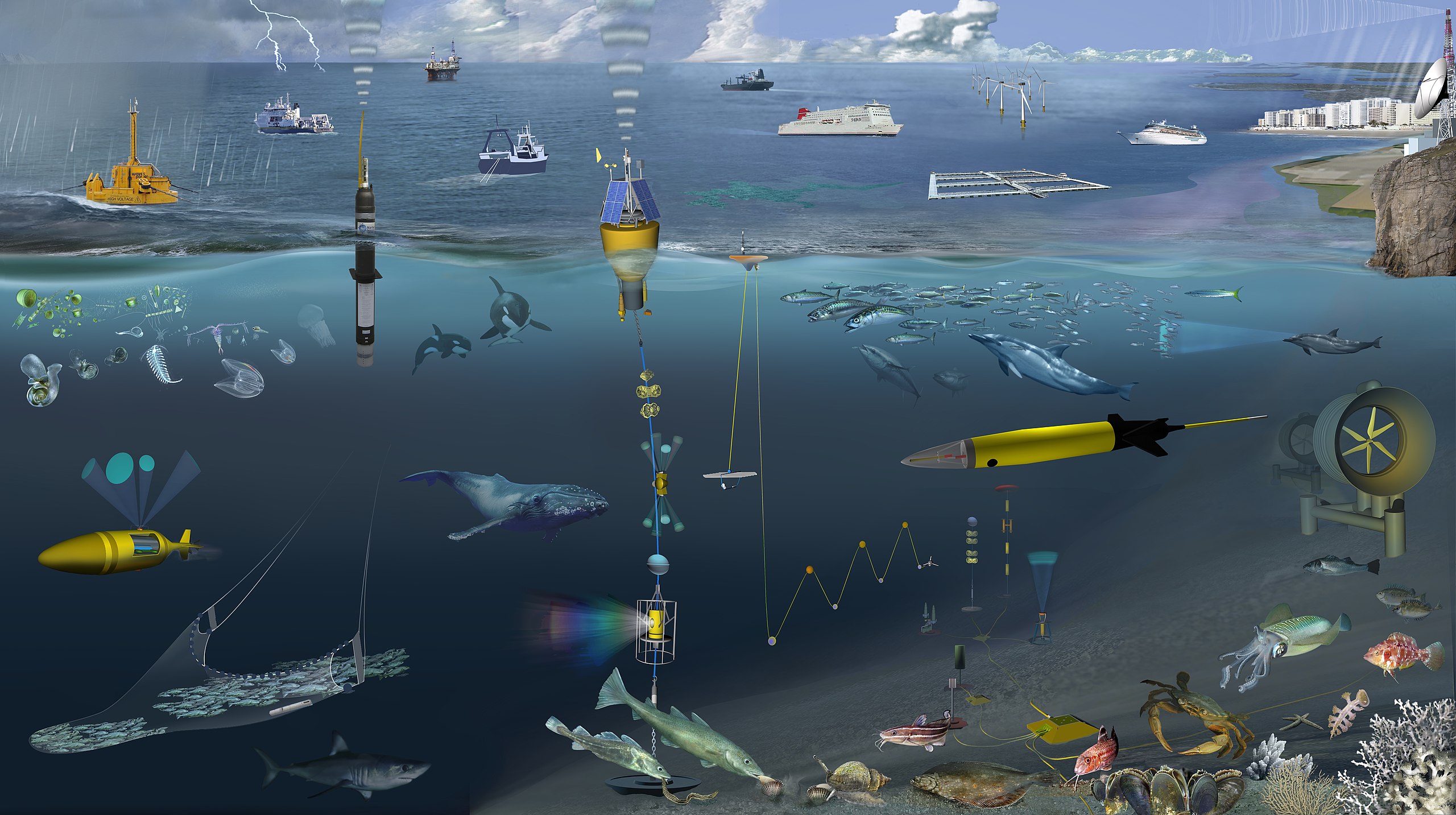

We develop three contributions that cumulatively address these problems. As a first step, we develop a reinforcement-learning-based algorithm to optimise the allocation of tasks based on the quality with which agents complete them, while adapting the algorithm in response to an agent’s judgement of its historical performance. We next develop an algorithm that allows an agent to allocate its limited resources so as to optimise its performance in completing tasks it has been assigned by other agents, learning the value of these tasks to those agents through reinforcement learning. For our final contribution, we combine these algorithms to provide a holistic solution to the problem of task and resource allocation in dynamic environments, while also extending it to make it more robust to environmental perturbations such as communication disruptions and harsh weather conditions.
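To give a rough feel for the first idea, here is a minimal sketch of an agent that learns which neighbour to hand a task to, and explores more when its recent results look worse than its long-run average. This is not the algorithm from the thesis: the `TaskAllocator` class, the epsilon-greedy rule, the tripling of the exploration rate, and every parameter name are assumptions made purely for illustration.

```python
import random
from collections import defaultdict


class TaskAllocator:
    """Hypothetical agent that learns which neighbour completes each task type best."""

    def __init__(self, neighbours, learning_rate=0.1, base_epsilon=0.2, seed=None):
        self.neighbours = list(neighbours)
        self.learning_rate = learning_rate
        self.base_epsilon = base_epsilon
        self.rng = random.Random(seed)
        # (task_type, neighbour) -> running estimate of completion quality
        self.q = defaultdict(float)
        # Rewards observed so far, used to judge historical performance
        self.history = []

    def epsilon(self, window=20):
        """Explore more when recent rewards fall below the long-run average."""
        if len(self.history) < 2 * window:
            return self.base_epsilon
        recent = sum(self.history[-window:]) / window
        overall = sum(self.history) / len(self.history)
        if recent < overall:
            # Assumed rule: triple the exploration rate, capped at 1.0
            return min(1.0, self.base_epsilon * 3)
        return self.base_epsilon

    def best(self, task_type):
        """Neighbour with the highest estimated quality for this task type."""
        return max(self.neighbours, key=lambda n: self.q[(task_type, n)])

    def choose(self, task_type):
        """Epsilon-greedy choice between exploring and exploiting."""
        if self.rng.random() < self.epsilon():
            return self.rng.choice(self.neighbours)
        return self.best(task_type)

    def update(self, task_type, neighbour, reward):
        """Move the estimate towards the observed quality of completion."""
        key = (task_type, neighbour)
        self.q[key] += self.learning_rate * (reward - self.q[key])
        self.history.append(reward)


if __name__ == "__main__":
    # Toy environment: each neighbour completes "sense" tasks with a fixed quality.
    quality = {"a": 0.2, "b": 0.9, "c": 0.5}
    agent = TaskAllocator(quality.keys(), seed=0)
    for _ in range(500):
        chosen = agent.choose("sense")
        agent.update("sense", chosen, quality[chosen])
    print(agent.best("sense"))
```

Everything here uses only the agent's own observations, which is the decentralised flavour described above; a drop in recent reward (for example after a perturbation) is what pushes the agent back into exploring.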

We evaluate these contributions individually, through the simulation of different representative systems, before evaluating our holistic solution through a realistic case study in an ocean-based environmental monitoring system.