“I’ve got news for Mr. Santayana: we’re doomed to repeat the past no matter what. That’s what it is to be alive.” - Kurt Vonnegut Jr

Whether it’s passwords for external services, API keys, or other forms of credentials, we know that our applications need them, and we also know that in reality they are highly likely to be exposed beyond the security boundaries we define for them. Most commonly the exposure comes from human error: keys committed to a GitHub repository 1,2, incorrect permissions on an S3 bucket 3,4,5, and so on.

Despite the never-ending line of historical examples telling us that the problem lies elsewhere, we still have a tendency to ‘blame the human’, or to blame a procedural problem. The procedure wasn’t followed, it wasn’t correct, somebody didn’t do the right thing at some point when doing it for the first, or thousandth, time. It’s an easy way of off-loading blame and responsibility, side-stepping difficult challenges, and failing to tackle them at the root.

Ad infinitum

Human beings are not designed for flawlessly repeating actions over long periods of time. Cut and paste errors are as common in security incidents as they are in coding. The most scripted and prescribed tasks are the ones that become most ingrained in our brains: a step-by-step procedure designed to protect secrets as we manipulate them and move them around becomes imprinted in our minds as we perform it frequently. The brain moves it into the background, and it loses sharp focus. If the task is continuous, frequent and repeated, mistakes are introduced through the increased frequency of actions combined with the natural error rate. If it’s a less frequent, procedural task, errors are a natural outcome of both the rate at which we forget and our lack of awareness that we have forgotten 6,7,8.

The need-to-know

One of the core principles in security is the need-to-know. Think of your secrets: which people need to know what, and why? While we see a strong sense of restricting access to secrets within the cloud engineering profession, it’s often driven off course by our own biases. That access to credentials should be strictly constrained to those that need to know is a given. But unfortunately we then go on to place everyone else in the exclusion zone, and ourselves firmly in the authorised one. This might come out of a feeling that we need to be able to access everything in order to fix and protect it. There are also signs that our choices sometimes come simply out of our own need for importance. I can see secrets and you can’t, therefore I am important.

Who needs to know?

Arriving at the wrong conclusions from what feels like all the right logical steps is a classic sign of starting off with the wrong question in the first place. Instead of asking ‘who needs credentials to access our systems and applications?’, ask ‘what do we need to do that needs credentials?’, and, crucially, get rid of the need to do it. For instance,

‘We need to access instances to see logs’ -> ‘We need to ship all logs’

In the world of cloud-native ephemeral servers and containers, this should be second nature now.

‘We need to log in to instances to fix things’ -> ‘We need to automate ephemerality’

We don’t always find making the conceptual switch to cloud-native services easy. We can help ourselves, however, by enforcing compute termination on a regular basis 9. There’s a myriad of other robustness benefits to this too: we gain resilience and unbroken service health by engineering constant resource breakage and destruction throughout the system’s lifetime.
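As a minimal sketch of what enforced termination could look like, the function below picks out instances that have outlived a maximum age. The `Instance` record, the instance IDs and the seven-day limit are all hypothetical; in a real system the list would come from the cloud provider and the result would be handed to whatever performs the termination.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Instance:
    """Hypothetical record of a running compute instance."""
    instance_id: str
    launched_at: datetime


def instances_to_terminate(instances, now, max_age):
    """Return the IDs of instances that have been running longer than max_age."""
    return [i.instance_id for i in instances if now - i.launched_at > max_age]


# Illustrative fleet: one instance nine days old, one three hours old.
now = datetime(2024, 1, 10, tzinfo=timezone.utc)
fleet = [
    Instance("i-old", now - timedelta(days=9)),
    Instance("i-new", now - timedelta(hours=3)),
]

print(instances_to_terminate(fleet, now, max_age=timedelta(days=7)))  # ['i-old']
```

Run on a schedule, a rule like this means no instance lives long enough for anyone to depend on logging in to it.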

What needs to know?

In a cloud environment we can shift our questions increasingly away from humans and manual processes towards automation and pure-machine systems. Now we can ask much more productive questions about what needs access to complete its function in our system, rather than having a ‘who’ at all. If a server is the only component that needs access to a database, then that should be the only actor that can ever access that database, by definition. We are now thinking of system components being authorised to take actions, in the best case using IAM roles and policies as our prime and only source of authentication and authorisation rather than using credentials at all.
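The idea that only the component that needs access is authorised can be sketched as a default-deny check. This is a toy model rather than how IAM itself evaluates policies, and the role, action and resource names are invented for illustration.

```python
# Hypothetical allow-list of (role, action, resource) triples.
# Anything not listed here is denied.
ALLOWED = frozenset({
    ("app-server-role", "connect", "orders-db"),
})


def is_authorised(role, action, resource):
    """Default deny: only explicitly listed triples are permitted."""
    return (role, action, resource) in ALLOWED


print(is_authorised("app-server-role", "connect", "orders-db"))  # True
print(is_authorised("engineer-role", "connect", "orders-db"))    # False
```

There is no human ‘who’ in the table at all: the only actor that can reach the database is the component whose function requires it.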

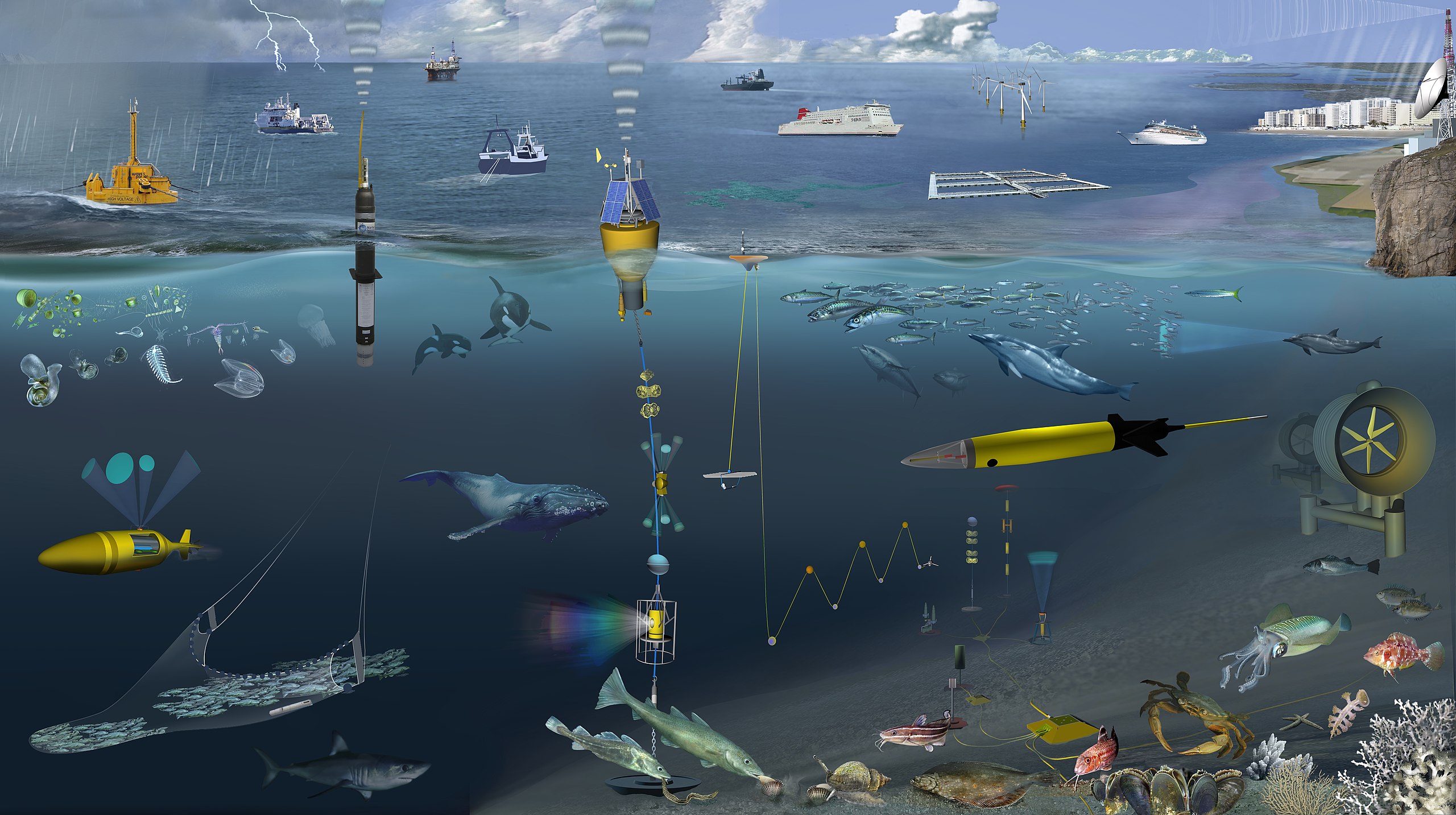

Security and the machine landscape

Given a cloud system, and the variety of tools and designs we can use, what kinds of approaches to secrets management do we see in the real world? Just as containers or serverless are not necessarily options for solving some problems we come across, not all the scenarios below will be available to us when we come to design our credentials management approach. In reality, ‘perfect’ decisions are not always open to us but are instead compromised by existing criteria or requirements. However, from a principled standpoint we can see the benefits and risks of each way of doing things, and hopefully find that internalising all secrets to machines only is realistic in many more cases than we think, if we’re ready to let go of human control.

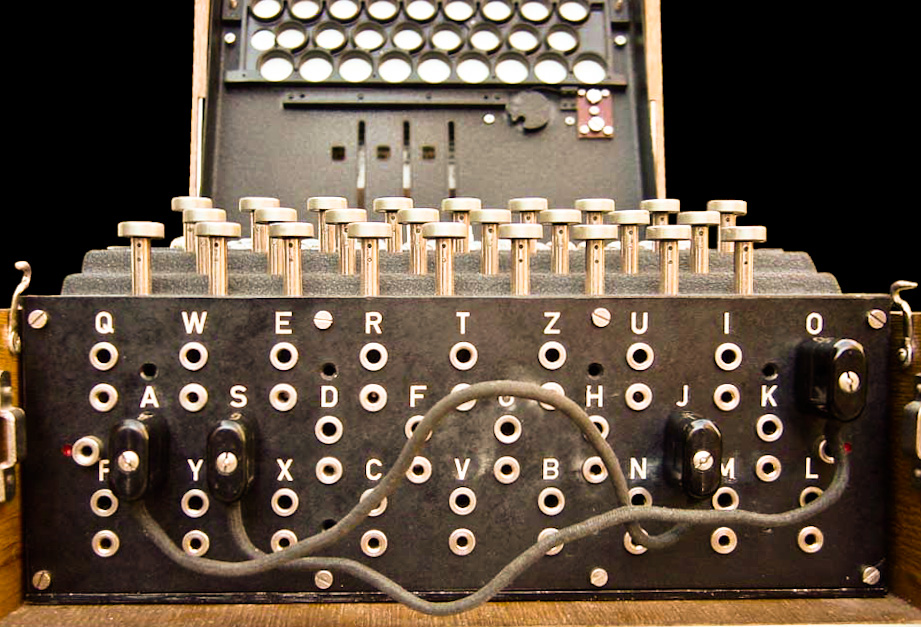

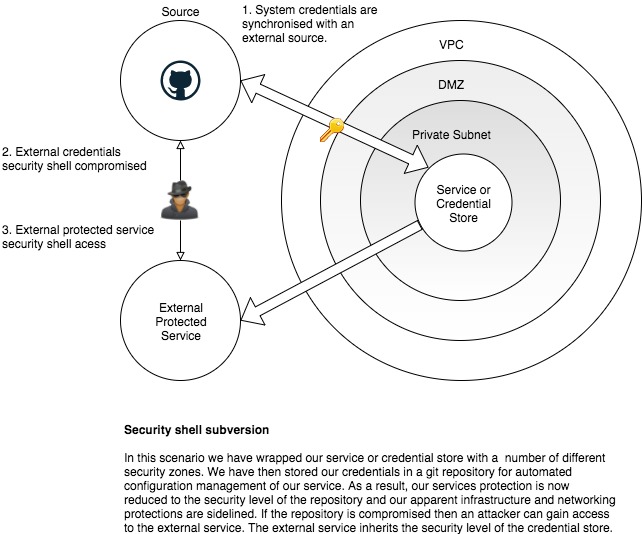

Credentials as configuration

This traditional approach uses the simple synchronisation of some external configuration with the system state. Whether through Puppet, Chef or Terraform, the aim is the same. The configuration and credentials are defined in our human-controlled files, and should represent exactly the same state as the real-life system. Commonly this is paired with an infrastructure pipeline, so that each peer-reviewed commit to a repository passes automatically through a CI/CD pipeline and alters the real system and infrastructure 10.

Pros

- Our entire system is defined purely in configuration

- We can automate the pipeline such that we manage and revert the system state purely through git commits.

Cons

- We migrate our security risk from inside the system to an external security shell, which we must then protect at the same level as the highest-level security shell of our system.

- Exposing secrets through repositories and errant commits is common

- Promotes the reuse of credentials and the recreation of systems using stale ones.
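To illustrate how easily a credential in configuration can be spotted once it has been committed, here is a small sketch of a scanner that could run before a commit is accepted. It assumes long-term AWS access key IDs are 20 characters beginning with `AKIA`, uses the example key ID from AWS documentation as sample data, and catches only that one pattern, so it reduces the risk rather than removing it.

```python
import re

# Assumption: long-term AWS access key IDs look like "AKIA" followed by
# 16 uppercase letters or digits. Other credential formats are not covered.
ACCESS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_suspect_lines(text):
    """Return 1-based line numbers that appear to contain an access key ID."""
    return [
        number
        for number, line in enumerate(text.splitlines(), start=1)
        if ACCESS_KEY_ID.search(line)
    ]


# Illustrative configuration file content.
sample = 'region = "eu-west-1"\naccess_key = "AKIAIOSFODNN7EXAMPLE"\n'

print(find_suspect_lines(sample))  # [2]
```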

Black-boxing credentials

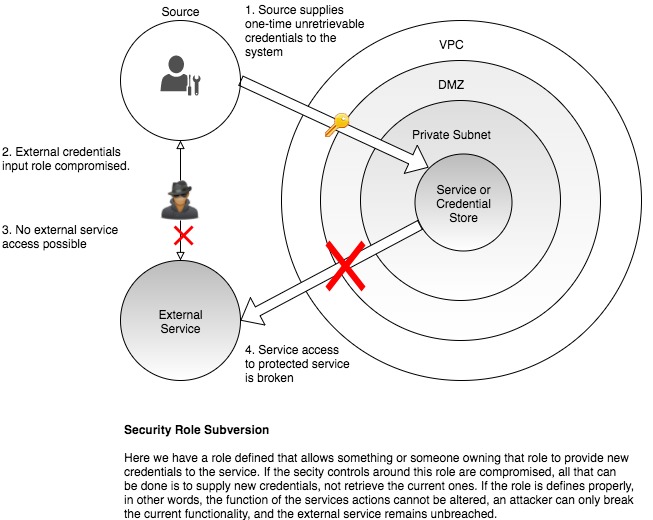

When we talk about black-box credentials we’re talking about one-time, irretrievable secrets. The principle is simple: we can’t recover secrets, ever. We pass secrets into our system and they are held within that system and its composite security layers, never to leave. Another system or human may possess a role that enables them to create and pass in credentials to the system, but they may not see any that already exist. The role is the point of control 11.

Pros

- Our secrets are not held external to the system; compromising current secrets requires breaching all the security shells of our system.

- Attackers can only subvert the role, and not the actual credentials. This means that they may break the system, but they may not compromise the target of the system’s credentials unless the attack is combined with other distinct attacks.

- Actively works against credential cut and paste reuse.

Cons

- The role requires the owner to have other roles that allow it or them to create the external credentials that need to be supplied to the system.
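The black-box principle can be sketched as an interface that accepts secrets but offers no way to read them back. The class below is a toy model with invented role and secret names: it lets a permitted role put a secret in, and lets the system use that secret to sign a message, but has no method that returns the secret itself. Python cannot truly hide the internal dictionary, so this only illustrates the shape of the interface, not an enforcement mechanism.

```python
import hashlib
import hmac


class BlackBoxStore:
    """Toy write-only secret store: secrets go in and are never handed back."""

    def __init__(self, writer_roles):
        self._writer_roles = frozenset(writer_roles)
        self._secrets = {}

    def put(self, role, name, value):
        """Store or overwrite a secret; only writer roles may do this."""
        if role not in self._writer_roles:
            raise PermissionError(f"{role} may not write secrets")
        self._secrets[name] = value

    def sign(self, name, message):
        """Use the secret inside the box; only the HMAC signature leaves."""
        return hmac.new(self._secrets[name], message, hashlib.sha256).hexdigest()


# Hypothetical role and secret names.
store = BlackBoxStore(writer_roles={"credential-writer"})
store.put("credential-writer", "payments-api-key", b"not-a-real-secret")

signature = store.sign("payments-api-key", b"GET /invoices")
print(len(signature))         # 64 hex characters for SHA-256
print(hasattr(store, "get"))  # False: there is no read path
```

Rotating a secret is simply another `put`; nobody ever needs to see the old value to replace it.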

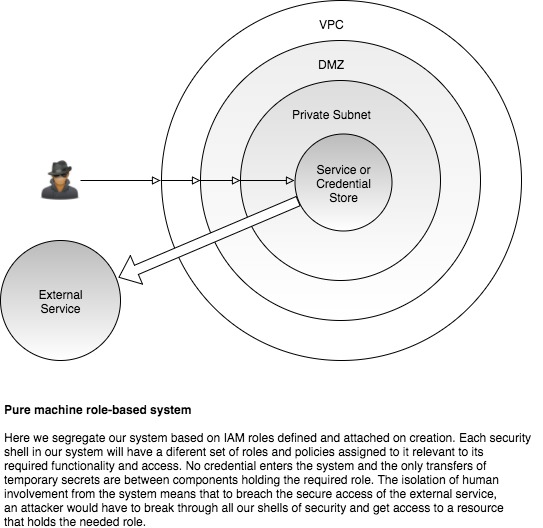

Pure machine systems

With modern, virtualised cloud services designed with higher-level cloud abstraction principles in mind, there is often no reason to enable human interaction with the internal system. We design the infrastructure, behaviour, and functionality and then allow the system to perform its actions. Reactive live-service actions are not human-triggered; instead the signal and response are architected, applied to the system, and left to the system to trigger. A simple example of this paradigm is the scaling of a system’s compute resources to traffic. No manual triggering is performed. The signal is tuned for CPU, network load, or whatever the case requires. The response is designed, scaling out instances for example. This pattern is then applied to the system, which responds without any intervention. Another example might be the automation of instance termination on receiving a number of 500 responses. We set the threshold and response, but leave it to the system to react to live events without our prompting.
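The signal-and-response pattern described above can be sketched as a rule that is designed once and then evaluated by the system. The thresholds, instance limits and one-instance step below are hypothetical values chosen for illustration, not a recommendation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingRule:
    """Hypothetical rule: thresholds on a signal such as average CPU percent."""
    scale_out_above: float
    scale_in_below: float
    min_instances: int
    max_instances: int


def desired_instances(rule, current, signal):
    """Return the instance count the system should move to for this signal."""
    if signal > rule.scale_out_above:
        target = current + 1
    elif signal < rule.scale_in_below:
        target = current - 1
    else:
        target = current
    # Clamp to the designed limits so the response can never run away.
    return max(rule.min_instances, min(rule.max_instances, target))


rule = ScalingRule(scale_out_above=70.0, scale_in_below=30.0,
                   min_instances=2, max_instances=6)

print(desired_instances(rule, current=3, signal=85.0))  # 4
print(desired_instances(rule, current=2, signal=10.0))  # 2
print(desired_instances(rule, current=3, signal=50.0))  # 3
```

The human contribution ends at defining `rule`; every later decision is made by the system against live signals.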

The assignment of IAM roles to groups of resources allows this human-designed, machine-live separation to be extended into authentication and authorisation control. We now remove supplied credentials from the design altogether, and replace ‘what you have’ with ‘what you are’ within the system. We architect the system’s roles and policies so that we can assign the right to be authenticated to resources. In the black-box system this right, the ability to generate and use credentials, was assigned to the external third party. Here, we move it entirely within the system and embed it within machines. IAM-only access to an RDS Aurora database within AWS, or allowing access to VPC endpoints within a subnet, are good examples of this approach 12,13.
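For the RDS case, the right to authenticate is expressed as an IAM policy attached to the resource’s role. The sketch below only builds such a policy document as a Python dictionary; the region, account ID, cluster resource ID and database user are placeholders, and the `rds-db:connect` action and ARN layout should be checked against current AWS documentation before use.

```python
import json


def rds_connect_policy(region, account_id, db_resource_id, db_user):
    """Build a policy document allowing IAM-authenticated connection as db_user."""
    resource = (
        f"arn:aws:rds-db:{region}:{account_id}:dbuser:{db_resource_id}/{db_user}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "rds-db:connect",
                "Resource": resource,
            }
        ],
    }


# All identifiers below are placeholders, not real resources.
policy = rds_connect_policy(
    region="eu-west-1",
    account_id="123456789012",
    db_resource_id="cluster-ABCDEFGHIJKL",
    db_user="app_user",
)

print(json.dumps(policy, indent=2))
```

No password appears anywhere in this design: a resource holding the role can connect, and nothing else can.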

No non-batteries required

In a modern cloud-native environment, it’s possible to place credentials inside those ecosystems and protect them with roles rather than passing and managing credentials at all. Although not all services fit easily into this ethos, we can see through IAM RDS access and VPC endpoints how we can access the majority of our functionality. In addition, we could populate AWS Parameter Store with any other credentials and enable roles to access those namespaces 14. Doing things this way makes credential management and access a design task, not a live procedure. Resources are preassigned the roles they need to access the secrets they need to enable their functionality, and no more. Things that don’t require access to function never get it, or indeed know that those secrets exist in the system.
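Namespace-scoped access of this kind can be modelled as a mapping from roles to the parameter path prefixes they may read. This is a simplified sketch with invented role and parameter names, not the Parameter Store API; it is only meant to show that the mapping is decided at design time and that everything outside it is invisible to the role.

```python
# Hypothetical design-time mapping of roles to readable namespaces.
# Trailing slashes stop "/orders/" from also matching "/orders-archive/".
ROLE_NAMESPACES = {
    "orders-service-role": ("/orders/",),
    "billing-service-role": ("/billing/", "/shared/"),
}


def can_read(role, parameter_name):
    """Return True only if the parameter sits in a namespace granted to the role."""
    return any(
        parameter_name.startswith(namespace)
        for namespace in ROLE_NAMESPACES.get(role, ())
    )


print(can_read("orders-service-role", "/orders/db-password"))  # True
print(can_read("orders-service-role", "/billing/api-key"))     # False
print(can_read("unknown-role", "/orders/db-password"))         # False
```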

When we review where humans fit into all this, we are in reality left with two main points of control. Firstly, the definition and allocation of IAM roles to resources at the design stage. Secondly, the assignment to groups of users of their own role: the ability to put credentials into the cloud system, a black box where those credentials will live sealed away from any further human access.

Through this internal sealing of credentials inside the cloud ecosystem, the shift towards role-based authentication, and the reduction of human involvement to inputs to the environment only, with no ability to output secrets, we can make secure secrets management much more an engineered function of the system than a procedural one reliant on our best-effort practices.